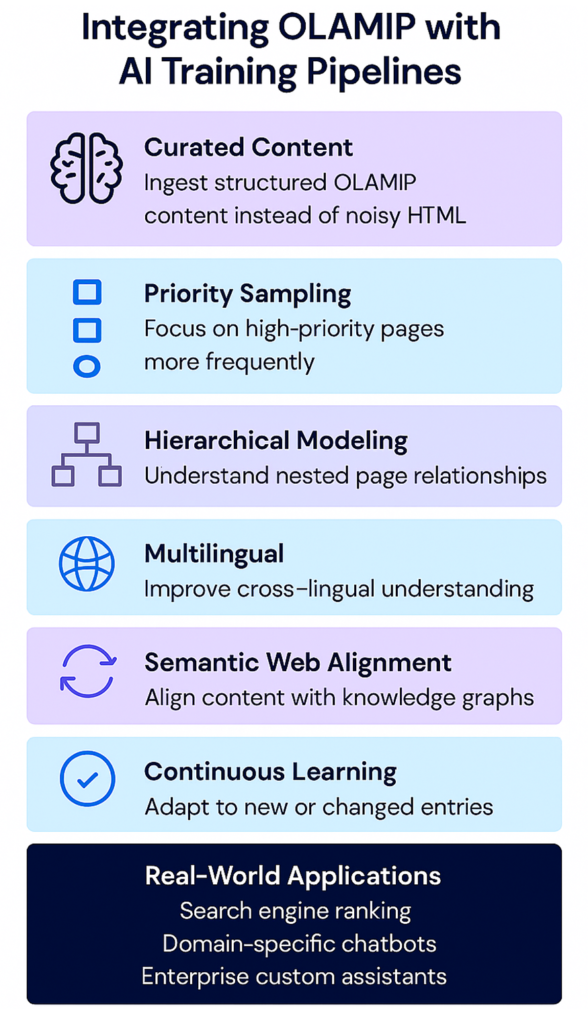

Integrating OLAMIP with AI training pipelines enables models to learn from curated, structured web content instead of noisy HTML, improving accuracy, relevance, and efficiency.

Here’s how this integration works:

1. Direct Ingestion of OLAMIP Files

Training pipelines can ingest /olamip.json files as structured datasets:

- Each entry provides a clean summary, tags, and metadata.

- Pipelines can skip scraping and HTML parsing, because the content is already optimized for LLMs.

Why it matters: This dramatically reduces preprocessing time and improves the quality of training inputs.
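As a rough illustration of direct ingestion, the sketch below parses an OLAMIP-style document and flattens it into training records. The key names (`entries`, `url`, `summary`, `tags`, `metadata`) and the `load_records` helper are assumptions made for this example; the actual field names should be checked against the OLAMIP specification.

```python
import json

# Hypothetical sample document. The key names used here are assumptions
# for illustration and are not taken from the OLAMIP specification.
SAMPLE = """
{
  "entries": [
    {"url": "https://example.com/a", "summary": "Page A summary.",
     "tags": ["alpha"], "metadata": {"lang": "en"}},
    {"url": "https://example.com/b", "summary": "", "tags": []}
  ]
}
"""

def load_records(raw_json):
    """Turn an OLAMIP-style JSON string into flat training records."""
    doc = json.loads(raw_json)
    records = []
    for entry in doc.get("entries", []):
        summary = (entry.get("summary") or "").strip()
        if not summary:
            continue  # nothing to learn from an empty summary
        records.append({
            "url": entry.get("url"),
            "text": summary,
            "tags": list(entry.get("tags", [])),
            "metadata": dict(entry.get("metadata", {})),
        })
    return records

records = load_records(SAMPLE)
for record in records:
    print(record["url"], "->", record["text"])
```

In a real pipeline the JSON string would come from fetching a site's /olamip.json rather than from an inline sample.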

2. Priority-Based Sampling

The priority field helps pipelines decide what to learn from:

- High-priority pages (e.g. product pages, cornerstone articles) are sampled more frequently.

- Medium- and low-priority entries are used for generalization or background knowledge.

Why it matters: Models focus on the most relevant and authoritative content first.
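One possible way to act on the priority field is weighted sampling with replacement. In the sketch below, the weight values and the `sample_by_priority` helper are illustrative assumptions, as is the exact spelling of the priority labels; a real pipeline would tune the weights to its own data mix.

```python
import random
from collections import Counter

# Assumed mapping from priority label to sampling weight (illustrative values).
PRIORITY_WEIGHTS = {"high": 3.0, "medium": 1.5, "low": 1.0}

def sample_by_priority(entries, k, seed=0):
    """Draw k entries with replacement, favouring higher priorities."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    weights = [
        PRIORITY_WEIGHTS.get(str(e.get("priority", "low")).lower(), 1.0)
        for e in entries
    ]
    return rng.choices(entries, weights=weights, k=k)

entries = [
    {"url": "/product", "priority": "High"},
    {"url": "/blog-post", "priority": "Medium"},
    {"url": "/archive", "priority": "Low"},
]
batch = sample_by_priority(entries, k=6000)
counts = Counter(e["priority"] for e in batch)
print(counts)
```

With these weights, high-priority entries appear roughly three times as often as low-priority ones, while low-priority content still contributes to every epoch.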

3. Multilingual Fine-Tuning

With multilingual summaries, pipelines can:

- Train models on parallel text across languages.

- Improve cross-lingual understanding and translation capabilities.

Why it matters: Supports global deployment and reduces English-centric bias.
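To make the parallel-text idea concrete, the sketch below builds language pairs from a single entry. It assumes, purely for illustration, that multilingual summaries are stored in a `summaries` object keyed by language code; the real OLAMIP layout may differ, and `parallel_pairs` is a hypothetical helper.

```python
from itertools import combinations

def parallel_pairs(entry):
    """Build every source/target pair from one entry's summaries."""
    # Assumption: summaries are keyed by language code, e.g. {"en": "...", "es": "..."}.
    summaries = entry.get("summaries", {})
    langs = sorted(lang for lang, text in summaries.items() if text)
    return [
        {"src_lang": a, "src": summaries[a], "tgt_lang": b, "tgt": summaries[b]}
        for a, b in combinations(langs, 2)
    ]

entry = {
    "url": "https://example.com/pricing",
    "summaries": {
        "en": "Pricing plans.",
        "es": "Planes de precios.",
        "fr": "Offres tarifaires.",
        "de": "",  # empty translations are skipped
    },
}
pairs = parallel_pairs(entry)
for pair in pairs:
    print(pair["src_lang"], "->", pair["tgt_lang"])
```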

4. Hierarchical Context Modeling

Nested children structures allow models to:

- Understand relationships between pages (e.g. category → product → review).

- Learn how information flows across a site.

Why it matters: Enables better summarization, navigation, and contextual reasoning.
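A simple way to expose the hierarchy to a model is to prefix each summary with its path through the tree. The sketch below assumes entries carry `title`, `summary`, and `children` keys; only `children` is named in the text above, so the other names and both helper functions are hypothetical.

```python
def walk(entries, path=()):
    """Depth-first traversal yielding (path, entry) for every node."""
    for entry in entries:
        current = path + (entry.get("title", "untitled"),)
        yield current, entry
        # Recurse into nested children, carrying the path down.
        yield from walk(entry.get("children", []), current)

def contextual_text(path, entry):
    """Prefix the summary with its breadcrumb so the hierarchy is visible."""
    return " > ".join(path) + ": " + entry.get("summary", "")

site = [{
    "title": "Shoes",
    "summary": "All footwear.",
    "children": [{
        "title": "Trail Runner",
        "summary": "Lightweight trail shoe.",
        "children": [{"title": "Review", "summary": "Grippy and durable."}],
    }],
}]

texts = [contextual_text(path, entry) for path, entry in walk(site)]
for text in texts:
    print(text)
```

Each training example then carries its category → product → review context explicitly, instead of relying on the model to infer it.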

5. Semantic Web Alignment

The semantic field links to schema.org types or RDF resources:

- Models can align OLAMIP entries with structured knowledge graphs.

- This improves entity linking, disambiguation, and fact-checking.

Why it matters: Boosts accuracy and explainability in downstream tasks.
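As a minimal sketch of this alignment, the code below groups entries by the type their semantic field points to. It assumes the field holds either a single type URL or a list of them; that shape, the `url` key, and the `group_by_semantic_type` helper are assumptions for illustration only.

```python
from collections import defaultdict

def group_by_semantic_type(entries):
    """Map each semantic type URL to the URLs of entries that declare it."""
    groups = defaultdict(list)
    for entry in entries:
        value = entry.get("semantic")
        # Assumption: the field is a single string or a list of strings.
        types = [value] if isinstance(value, str) else list(value or [])
        for type_url in types:
            groups[type_url].append(entry["url"])
    return dict(groups)

entries = [
    {"url": "/trail-runner", "semantic": "https://schema.org/Product"},
    {"url": "/trail-runner/review",
     "semantic": ["https://schema.org/Review", "https://schema.org/CreativeWork"]},
    {"url": "/about"},  # no semantic annotation, so it is left ungrouped
]
groups = group_by_semantic_type(entries)
for type_url, urls in groups.items():
    print(type_url, urls)
```

The resulting groups could serve as join keys against a knowledge graph that uses the same schema.org types.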

6. Continuous Learning

Websites can update OLAMIP files regularly:

- Pipelines ingest deltas (new or changed entries).

- Models stay current without full retraining.

Delta Updates for Scalable AI Refresh Cycles

To support continuous learning at scale, OLAMIP introduces an optional companion file: olamip-delta.json. This file contains only the changes since the last full snapshot, including new pages, updated summaries, and removed URLs. AI systems can fetch these lightweight deltas to stay synchronized with your evolving content without reprocessing the entire OLAMIP dataset. The main olamip.json remains the authoritative, fully updated snapshot of your site, while olamip-delta.json provides efficient incremental updates.

Why it matters: AI systems can pick up content changes shortly after they are published and stay aligned with evolving content.
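The delta mechanism can be sketched as a merge over a URL-keyed snapshot. The key names `added`, `updated`, and `removed` are assumptions chosen to mirror the three kinds of change described above (new pages, updated summaries, removed URLs); the actual layout of olamip-delta.json may differ, and `apply_delta` is a hypothetical helper.

```python
def apply_delta(snapshot, delta):
    """Return a new URL-keyed snapshot with the delta applied."""
    merged = dict(snapshot)  # shallow copy; the input snapshot is not mutated
    for entry in delta.get("added", []):
        merged[entry["url"]] = entry
    for entry in delta.get("updated", []):
        # Overlay changed fields on the existing entry, keeping the rest.
        merged[entry["url"]] = {**merged.get(entry["url"], {}), **entry}
    for url in delta.get("removed", []):
        merged.pop(url, None)  # ignore URLs that are already gone
    return merged

snapshot = {
    "/a": {"url": "/a", "summary": "Old A.", "priority": "High"},
    "/b": {"url": "/b", "summary": "Page B.", "priority": "Low"},
}
delta = {
    "added": [{"url": "/c", "summary": "New C."}],
    "updated": [{"url": "/a", "summary": "New A."}],
    "removed": ["/b"],
}
current = apply_delta(snapshot, delta)
print(sorted(current))
```

Only the entries touched by the delta need to be re-embedded or re-queued for training; untouched entries carry over unchanged.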

Real-World Applications

- Search engines can use OLAMIP to train ranking models with better semantic signals.

- Chatbots can fine-tune on OLAMIP summaries for domain-specific expertise.

- Enterprise AI can ingest OLAMIP from internal portals to train custom assistants.

To complement the high‑level overview provided on this page, a dedicated technical guide is available for teams implementing Retrieval‑Augmented Generation (RAG) systems. The companion page, “Using OLAMIP in RAG Pipelines,” provides a deeper breakdown of how OLAMIP entries can be transformed into retrieval‑ready chunks, embedded with metadata‑aware strategies, indexed in vector databases, and used to power domain‑specific assistants. It includes architecture diagrams, chunking methodologies, retrieval scoring models, and example code demonstrating how OLAMIP integrates directly into modern RAG workflows. This separation ensures that the AI Pipeline Integration page remains conceptual, while the RAG guide offers the full implementation details for engineering teams.